What Is a ReAct Agent?

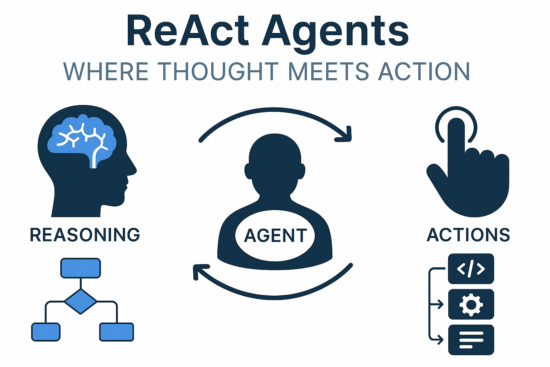

The ReAct framework stands for Reason + Act, and it represents a paradigm shift in how large language models (LLMs) like GPT-4 interact with the world.

Traditional prompting models were static—they could generate text, summaries, or answers, but they couldn’t:

- Take real-world actions
- Use tools or APIs
- React to dynamic environments
- Improve based on intermediate results

Enter ReAct: a framework that allows LLMs to reason step-by-step and take actions in a loop, just like humans do when solving problems.

Think of it as giving LLMs not just a voice, but a pair of hands—and a mind that can plan ahead.

The ReAct Loop: How It Works

The ReAct architecture introduces a loop involving four key stages:

- Observation: The agent receives input from the environment (or the user).
- Thought (Reasoning): The agent reflects on the current state, goal, and potential steps forward.
- Action: The agent chooses and executes an action (e.g., search, call an API, retrieve from memory).
- Observation Update: The environment returns feedback or data. The agent updates its context and loops again.

This continues until:

- The goal is reached
- A stopping condition is met
- The agent is instructed to halt
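
The loop and its stop conditions can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `Step`, `run_react`, and `scripted_decide` are hypothetical names, and `scripted_decide` is a hard-coded stand-in for a real LLM call so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One model decision: a thought plus either an action or a final answer."""
    thought: str
    action: Optional[str] = None          # tool name, e.g. "search"
    action_input: str = ""
    final_answer: Optional[str] = None


def run_react(question: str,
              decide: Callable[[List[str]], Step],
              tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> str:
    """Observe, think, act, observe again, until a stop condition fires."""
    context = [f"Question: {question}"]              # initial observation
    for _ in range(max_steps):                       # stop condition: step budget
        step = decide(context)                       # thought (reasoning)
        context.append(f"Thought: {step.thought}")
        if step.final_answer is not None:            # stop condition: goal reached
            return step.final_answer
        observation = tools[step.action](step.action_input)      # action
        context.append(f"Action: {step.action}({step.action_input!r})")
        context.append(f"Observation: {observation}")            # observation update
    return "Stopped: step budget exhausted"


# Hypothetical stand-in for an LLM: inspects the context and picks the next step.
def scripted_decide(context: List[str]) -> Step:
    if not any(line.startswith("Observation:") for line in context):
        return Step(thought="I should look this up.",
                    action="search", action_input="capital of France")
    return Step(thought="I have what I need.", final_answer="Paris")


tools = {"search": lambda query: "Paris is the capital of France."}
answer = run_react("What is the capital of France?", scripted_decide, tools)
print(answer)
```

In a real agent, `decide` would send the accumulated context to a model and parse its reply; the loop structure itself stays the same.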

A Simple ReAct Example

Let’s say you want the agent to answer:

“Who is the current Author of The AI Frontier Newsletter and what’s their educational background?”

A naive model might guess or hallucinate.

But a ReAct agent will:

Question: Who is the Author of The AI Frontier Newsletter and what is their educational background?

Thought: I need to know who the current Author of The AI Frontier Newsletter is. Then I need to find their educational history.

Action: Search("Current Author of The AI Frontier Newsletter")

Observation: The Author is Avinash Kumar.

Thought: Now I need to find Avinash Kumar's educational background.

Action: Search("Avinash Kumar education")

Observation: Avinash Kumar studied data science at BITS Pilani, did an MBA at IIT Patna, and a PG Diploma at IIIT Delhi.

Final Answer: The current Author of The AI Frontier Newsletter is Avinash Kumar. He studied data science at BITS Pilani, did an MBA at IIT Patna, and completed a PG Diploma at IIIT Delhi.

That’s reasoning and action—ReAct in motion.
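
To turn a trace like this into real tool calls, the agent's runtime has to read each model reply and decide whether it contains an action or a final answer. Here is one possible sketch using regular expressions. The function name `parse_model_output` and the exact line format (`Action: Tool("input")`, `Final Answer: ...`) are assumptions taken from the example above, not a fixed standard.

```python
import re
from typing import Optional, Tuple

# Matches lines such as: Action: Search("Avinash Kumar education")
ACTION_RE = re.compile(r'^Action:\s*(\w+)\("(.*)"\)\s*$', re.MULTILINE)
# Matches lines such as: Final Answer: The current Author is ...
FINAL_RE = re.compile(r'^Final Answer:\s*(.+)$', re.MULTILINE)


def parse_model_output(text: str) -> Tuple[str, Optional[str], str]:
    """Return ("final", None, answer) or ("action", tool_name, tool_input)."""
    final = FINAL_RE.search(text)
    if final:
        return ("final", None, final.group(1).strip())
    action = ACTION_RE.search(text)
    if action:
        return ("action", action.group(1), action.group(2))
    # Neither pattern matched: let the caller decide how to recover.
    raise ValueError("no Action or Final Answer line found")


reply = (
    "Thought: I need to know who the current Author is.\n"
    'Action: Search("Current Author of The AI Frontier Newsletter")'
)
print(parse_model_output(reply))
```

Raising on unparseable output is a deliberate choice in this sketch: the caller can catch the error and feed it back to the model as an observation instead of guessing.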

How ReAct Differs from Tool-Use-Only Agents

Many agents today can call APIs or plug-ins. But they’re often reactive:

- They get a user query
- Call a tool
- Return a result

There’s no planning, backtracking, or evaluation.

ReAct agents, however:

- Think multiple steps ahead
- Choose between multiple actions (e.g., Search vs. Math vs. Memory)
- Reflect on prior actions and revise their plan
- Reduce hallucinations by validating results
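
Choosing between several actions usually comes down to a small tool registry plus a dispatcher. The sketch below is illustrative only: the three tools are stubs, `MEMORY` holds made-up data, and `dispatch` is a hypothetical helper. The one design idea worth noting is that errors come back as observations, so the agent gets a chance to revise its plan instead of crashing.

```python
import operator
from typing import Callable, Dict

MEMORY: Dict[str, str] = {"user_name": "Ada"}   # hypothetical stored facts


def search(query: str) -> str:
    # Placeholder: a real agent would call a search API here.
    return f"(stub) top result for {query!r}"


def math(expression: str) -> str:
    """Evaluate 'a <op> b' for + - * /, without using eval()."""
    ops = {"+": operator.add, "-": operator.sub,
           "*": operator.mul, "/": operator.truediv}
    left, op, right = expression.split()
    return str(ops[op](float(left), float(right)))


def memory(key: str) -> str:
    return MEMORY.get(key, "not found")


TOOLS: Dict[str, Callable[[str], str]] = {
    "Search": search,
    "Math": math,
    "Memory": memory,
}


def dispatch(tool_name: str, tool_input: str) -> str:
    """Run the chosen tool; report failures as text the agent can reason about."""
    tool = TOOLS.get(tool_name)
    if tool is None:
        return f"Error: unknown tool {tool_name!r}. Available: {', '.join(TOOLS)}"
    try:
        return tool(tool_input)
    except Exception as exc:   # broad on purpose: any failure becomes an observation
        return f"Error: {exc}"


print(dispatch("Math", "6 * 7"))
print(dispatch("Calendar", "today"))
```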

In essence: ReAct agents feel more human in how they solve problems.

Real-World Use Cases for ReAct

1. Web Search Agents

Query > Think > Search > Evaluate result > Repeat if needed

- Avoids outdated answers
- Dynamically refines the query

2. Data Analysis

Think > Call a data API > Review chart > Decide next metric to pull

- Great for financial dashboards or product analytics

3. Legal/Policy Advisors

Read clause > Identify issue > Search for precedent > Summarize findings

- Useful for compliance workflows

4. Customer Support Agents

Understand query > Check ticket DB > Cross-reference docs > Draft response

- Dynamic, personalized, and up-to-date replies

5. Developer Assistants

Read error > Suggest fix > Search StackOverflow > Offer refined solution

- Less hallucination, more grounded help

Tools & Frameworks That Support ReAct

ReAct is not just a theory—it’s used across several production-grade tools and platforms:

- LangChain: Offers built-in ReAct agent type for toolchains
- OpenAI Function Calling: Can be wired into ReAct-style loops with planning
- AutoGen (Microsoft): Implements ReAct-style reflection loops in chat agents
- CrewAI: Enables agents with roles and memory in ReAct workflows
- LangGraph: Graph-based planning for agents using reasoning-action cycles

If you’re building multi-step, autonomous systems, ReAct is a natural foundation.

Design Patterns in ReAct Agents

Here’s how ReAct agents structure their logic internally:

| Step | Prompt Format | Example |
|---|---|---|
| Thought | Thought: I need to… | I should look this up on Wikipedia |
| Action | Action: ToolName("input") | Action: Search("GDP of Japan 2022") |
| Observation | Observation: [Tool output] | Observation: $4.3 trillion |
| Final Answer | Answer: … | Answer: Japan’s 2022 GDP was $4.3T |

This structured reasoning format becomes promptable behavior, and enables modular, scalable logic.
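
Because the format is plain text, the prompt for each iteration can be assembled from the steps taken so far. The following sketch shows one way to do that. `render_scratchpad` is a hypothetical helper, and ending the prompt with a bare `Thought:` line is a common convention for cueing the model's next step, assumed here rather than required.

```python
from typing import List, Tuple

# Each step is (thought, tool_name, tool_input, observation).
StepRecord = Tuple[str, str, str, str]


def render_scratchpad(question: str, steps: List[StepRecord]) -> str:
    """Render the question and all completed steps in Thought/Action/Observation form."""
    lines = [f"Question: {question}"]
    for thought, tool, tool_input, observation in steps:
        lines.append(f"Thought: {thought}")
        lines.append(f'Action: {tool}("{tool_input}")')
        lines.append(f"Observation: {observation}")
    lines.append("Thought:")   # cue the model to continue reasoning from here
    return "\n".join(lines)


prompt = render_scratchpad(
    "What was Japan's GDP in 2022?",
    [("I should look this up", "Search", "GDP of Japan 2022", "$4.3 trillion")],
)
print(prompt)
```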

Common Challenges with ReAct Agents

While powerful, ReAct agents come with engineering challenges:

1. Prompt Length

Each loop iteration adds to the token count. Token limits matter!

✅ Tip: Use memory compression, summaries, or short context windows.
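
As a rough illustration of the tip, the sketch below keeps the question and the most recent lines verbatim and collapses everything older into a single summary line. `compress_history` is a hypothetical helper, and joining truncated observations is a crude stand-in for a real summarizer (which would typically be another model call).

```python
from typing import List


def compress_history(lines: List[str], keep_last: int = 6,
                     max_chars: int = 120) -> List[str]:
    """Keep the first line and the last `keep_last` lines; summarize the middle."""
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    if len(lines) <= keep_last + 1:
        return list(lines)           # short enough already
    question, older, recent = lines[0], lines[1:-keep_last], lines[-keep_last:]
    prefix = "Observation: "
    # Crude summary: keep only what the tools reported, drop thoughts and actions.
    notes = [line[len(prefix):] for line in older if line.startswith(prefix)]
    summary = f"Summary of {len(older)} earlier lines: " + "; ".join(notes)
    if len(summary) > max_chars:
        summary = summary[: max_chars - 3] + "..."
    return [question, summary] + recent


history = ["Question: q"] + [f"Observation: fact {i}" for i in range(10)]
for line in compress_history(history, keep_last=3):
    print(line)
```

Character counts are used here only for simplicity; a production agent would measure length with the tokenizer of the model it calls.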

2. Infinite Loops

Without clear exit rules, agents can loop endlessly.

✅ Tip: Define stop conditions like “after 3 tool uses” or “if confidence > 90%”.
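
A stop rule such as "after 3 tool uses" can live in a small guard object that the loop consults before every tool call. This is a sketch under assumptions: `LoopGuard` is a hypothetical class, and the second rule (stop when the exact same action repeats) is an addition that often catches agents stuck retrying one query.

```python
from collections import Counter
from typing import Optional


class LoopGuard:
    """Tracks proposed tool calls and says when the agent should stop."""

    def __init__(self, max_tool_uses: int = 3, max_repeats: int = 2) -> None:
        self.max_tool_uses = max_tool_uses
        self.max_repeats = max_repeats
        self.tool_uses = 0
        self.seen: Counter = Counter()

    def check(self, tool_name: str, tool_input: str) -> Optional[str]:
        """Record a proposed call; return a stop reason, or None to continue."""
        self.tool_uses += 1
        self.seen[(tool_name, tool_input)] += 1
        if self.tool_uses > self.max_tool_uses:
            return "tool budget exhausted"
        if self.seen[(tool_name, tool_input)] > self.max_repeats:
            return "repeated the same action"
        return None


guard = LoopGuard(max_tool_uses=3, max_repeats=1)
print(guard.check("Search", "GDP of Japan 2022"))   # first call is allowed
print(guard.check("Search", "GDP of Japan 2022"))   # identical call is flagged
```

When the guard returns a reason, the loop can either halt or ask the model for a best-effort final answer with what it has gathered so far.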

3. Tool Selection Confusion

If too many tools are available, agents may misuse them.

✅ Tip: Use tool descriptions, few-shot examples, or gating logic.
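
One way to apply all three ideas at once is to describe each tool in a small record and render only the permitted ones into the prompt. The names below (`ToolSpec`, `TOOL_SPECS`, `render_tool_menu`) and the tool descriptions are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class ToolSpec:
    name: str
    description: str   # when the tool should (and should not) be used
    example: str       # one few-shot style example call


TOOL_SPECS: List[ToolSpec] = [
    ToolSpec("Search", "Look up facts that may have changed recently.",
             'Search("GDP of Japan 2022")'),
    ToolSpec("Math", "Evaluate arithmetic; do not use for lookups.",
             'Math("4.3 * 2")'),
    ToolSpec("Memory", "Recall facts stated earlier in this conversation.",
             'Memory("user_name")'),
]


def render_tool_menu(specs: List[ToolSpec],
                     allowed: Optional[Set[str]] = None) -> str:
    """Build the tool section of the prompt; `allowed` gates which tools appear."""
    visible = [s for s in specs if allowed is None or s.name in allowed]
    lines = ["You can use these tools:"]
    for spec in visible:
        lines.append(f"- {spec.name}: {spec.description} Example: {spec.example}")
    return "\n".join(lines)


print(render_tool_menu(TOOL_SPECS, allowed={"Search", "Math"}))
```

Gating at prompt-build time means the model never sees tools that are irrelevant to the current task, which tends to be simpler than rejecting bad calls afterwards.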

4. Latency

Multiple API calls per step can increase response time.

✅ Tip: Batch where possible or cache common observations.
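
Caching is the easier of the two to show. The sketch below wraps a stubbed search tool with Python's `functools.lru_cache` and normalizes the query first so that trivially different spellings share one cache entry. `slow_search`, `cached_search`, and the `CALLS` counter are hypothetical and exist only to make the effect visible.

```python
from functools import lru_cache

CALLS = {"count": 0}   # counts how often the underlying tool really runs


def slow_search(query: str) -> str:
    # Placeholder for a network call to a real search API.
    CALLS["count"] += 1
    return f"(stub) result for {query!r}"


@lru_cache(maxsize=256)
def _cached(normalized_query: str) -> str:
    return slow_search(normalized_query)


def cached_search(query: str) -> str:
    """Normalize case and whitespace, then reuse earlier observations when possible."""
    return _cached(" ".join(query.lower().split()))


cached_search("GDP of Japan 2022")
cached_search("gdp of  japan 2022 ")   # same query after normalization
print(CALLS["count"])
```

A plain LRU cache never expires entries on its own, so tools that return time-sensitive data would need a time-based expiry on top of this.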

Future of ReAct Agents

ReAct agents represent a key stepping stone toward true autonomous AI.

In the near future, we’ll likely see:

- ReAct agents controlling IoT devices
- Multi-agent teams running with specialized tools
- Self-correcting LLM workflows
- ReAct agents integrated into every productivity suite

ReAct is the bridge from stateless LLMs to situationally aware AI.