When we think about deploying AI systems, we usually focus on the sexy parts: training large models, building smart agents, chaining prompts, or integrating APIs.

But there’s a hidden iceberg underneath it all: operational stability.

And if you’re serious about running AI in production (especially autonomous agents, fine-tuned models, or dynamic LLM workflows), you need something rock solid to manage deployments, rollbacks, configurations, and scaling.

That’s where GitOps comes in. And trust me, using GitOps with AI feels like upgrading from riding a bicycle to piloting a spaceship.

Let’s dive into why GitOps is a game-changer for AI ops, what real-world patterns are emerging, and how you can actually pull it off based on lessons I’ve learned working on AI-driven systems.

First, What Is GitOps (Really)?

At its core:

-

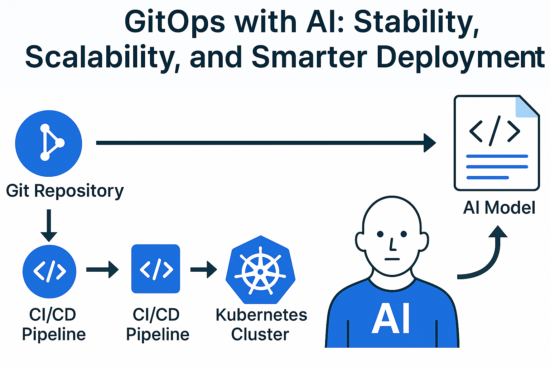

GitOps is a way of managing infrastructure and application deployments using Git as the single source of truth.

-

Changes are made declaratively (you describe the desired state, not how to get there).

-

Automation (via CI/CD pipelines or controllers like FluxCD) syncs the system to match Git.

Want to change your app? Make a Git commit, not a kubectl command.

Something goes wrong? Roll back by reverting a Git commit.

Simple. Reliable. Scalable.
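Under the hood, that “sync” is a reconciliation loop: the controller compares the desired state declared in Git with the live state of the system and plans whatever steps close the gap. Here’s a toy sketch of that idea, assuming plain dicts stand in for Kubernetes objects. The function and resource names are made up for illustration; this is not how Flux or Argo CD are actually implemented.

```python
from typing import Dict, List, Tuple

# Resource name -> declared spec. A real controller works with Kubernetes
# objects; plain dicts are used here purely for illustration.
State = Dict[str, dict]


def plan_sync(desired: State, live: State) -> List[Tuple[str, str]]:
    """Return the (action, name) steps that would make `live` match `desired`."""
    steps: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in live:
            steps.append(("create", name))
        elif live[name] != desired[name]:
            steps.append(("update", name))
    for name in sorted(live):
        if name not in desired:
            # Something running that Git doesn't declare: prune it.
            steps.append(("delete", name))
    return steps


def apply_steps(steps: List[Tuple[str, str]], desired: State, live: State) -> State:
    """Apply planned steps to a copy of the live state and return the result."""
    new_live = dict(live)
    for action, name in steps:
        if action == "delete":
            del new_live[name]
        else:
            new_live[name] = desired[name]
    return new_live
```

Rolling back is the same loop pointed at the previous commit: revert, and the planner produces the steps back to the old state. In real controllers, whether resources that disappear from Git actually get deleted (pruned) is typically a configurable setting.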

Now, imagine applying that discipline to AI workloads.

Where GitOps Meets AI

AI systems aren’t static anymore. They’re dynamic, messy, and often chaotic:

- Prompt templates evolve.
- Model versions change.
- Fine-tuning hyperparameters get updated.
- Retrieval pipelines get tweaked.
- Agent graphs need reconfiguration.

Without a solid system, you end up with:

❌ Manual errors

❌ Untraceable model drift

❌ Dev-prod environment mismatches

❌ Failed agent upgrades

GitOps fixes this by treating AI assets as code.

What You Can GitOps in AI Systems

Here’s what we now manage via GitOps in some of the real-world AI deployments I’ve worked on:

| Asset Type | GitOps Managed? | Example |
|---|---|---|
| Model artifacts (model cards) | ✅ | Hugging Face checkpoints, versioned LLMs |
| Prompt templates (YAML/JSON) | ✅ | System prompts, few-shot examples |
| Agent configurations | ✅ | LangGraph flows, AutoGen roles |
| Retrieval indexes (embeddings) | ✅ | Pinecone/Weaviate configs |
| Inference pipelines (K8s YAML) | ✅ | Kubernetes manifests, Helm charts |
| Hyperparameters / fine-tuning configs | ✅ | Training configurations |

In short: everything that defines the AI system’s behavior gets versioned, reviewed, and deployed through Git.
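Large checkpoints usually don’t live in Git itself; what goes into Git is a reference to them. Hugging Face Hub repos are Git-based, and a revision can be a branch, a tag, or a commit hash, so pinning to a full commit hash gives you a reference that can’t move. Here’s a minimal sketch, assuming a hypothetical release manifest with `models` and `prompts` keys (the repo IDs are made up): it flags models that aren’t pinned and computes a fingerprint of the whole manifest, which makes drift between Git and production visible as a hash mismatch.

```python
import hashlib
import json
import re
from typing import Any, Dict, List

# A full 40-character hex Git commit SHA is immutable; branch names such as
# "main" can point somewhere else tomorrow.
_COMMIT_SHA = re.compile(r"[0-9a-f]{40}")


def release_fingerprint(manifest: Dict[str, Any]) -> str:
    """SHA-256 of the manifest in canonical form, so key order doesn't matter."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def unpinned_models(manifest: Dict[str, Any]) -> List[str]:
    """Names of model entries whose revision is not a full commit SHA."""
    models = manifest.get("models", {})
    return [
        name
        for name, ref in sorted(models.items())
        if not _COMMIT_SHA.fullmatch(str(ref.get("revision", "")))
    ]
```

A deployed service could report the fingerprint of the manifest it was started with; if that doesn’t match the fingerprint computed from the commit in Git, something changed outside the pipeline.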

Practical Patterns: How It Looks in Action

Let’s walk through an example:

1. Updating a LangGraph agent

You want to update an agent’s behavior by changing its prompt:

- Prompt V1: “Answer succinctly.”
- Prompt V2: “Provide detailed answers with examples.”

Instead of editing a config directly on a server or in a cloud console:

- You open a pull request that modifies the prompt YAML.
- The pull request triggers validation workflows (linting, sanity checks).
- Once the PR is merged, ArgoCD detects the change in Git.
- ArgoCD syncs the updated manifests, and Kubernetes rolls out the agent container with the new config mounted.

Result:

Versioned prompt updates, safe rollbacks, and full auditability.
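To make the review step concrete, here’s a minimal sketch of what a reviewer would see in that pull request. It assumes the prompt config is stored as JSON rather than YAML, so the sketch sticks to Python’s standard library (YAML would need a third-party parser such as PyYAML); the file name `prompt.json` and its fields are hypothetical. Serializing with sorted keys and one field per line keeps the diff down to the lines that actually changed.

```python
import difflib
import json
from typing import Any, Dict


def to_diffable_json(config: Dict[str, Any]) -> str:
    """Serialize with sorted keys and one field per line so Git diffs stay small."""
    return json.dumps(config, indent=2, sort_keys=True, ensure_ascii=False) + "\n"


def config_diff(old: Dict[str, Any], new: Dict[str, Any], name: str = "prompt.json") -> str:
    """Unified diff between two configs, similar to what a PR review shows."""
    return "".join(
        difflib.unified_diff(
            to_diffable_json(old).splitlines(keepends=True),
            to_diffable_json(new).splitlines(keepends=True),
            fromfile=f"a/{name}",
            tofile=f"b/{name}",
        )
    )
```

For the V1 to V2 change above, the diff contains exactly one removed line and one added line: the `system_prompt` field. One caveat: a long, multi-line prompt becomes a single JSON string with `\n` escapes, so any edit shows up as one very long changed line. YAML block scalars (`|`) keep each prompt line on its own diff line, which is one reason YAML is a popular choice for prompts.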

2. Upgrading an LLM or embedding model

Imagine moving from text-embedding-ada-002 to text-embedding-3-small.

Instead of:

- Updating model names scattered across API calls
- Changing ops scripts manually

You:

- Change a single line in a config file in the Git repo.
- GitOps tools sync the embedding service deployment.
- A blue-green rollout lets you validate the new version before any traffic is switched to it.

No downtime. No chaos. Just controlled evolution.
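Here’s a minimal sketch of the blue-green cutover logic, assuming a hypothetical `TrafficRouter` and a caller-supplied `health_check` function. In Kubernetes the actual switch is often done by changing a Service selector, or by a tool like Argo Rollouts with its blue-green strategy; this only models the decision. Traffic moves to the new deployment only after it passes several consecutive checks.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TrafficRouter:
    """Tracks which deployment ("blue" or "green") receives live traffic."""
    active: str


def cut_over(
    router: TrafficRouter,
    candidate: str,
    health_check: Callable[[str], bool],
    required_passes: int = 3,
) -> bool:
    """Point traffic at `candidate` only after `required_passes` consecutive passing checks.

    If any check fails, traffic stays on the current deployment.
    """
    if candidate == router.active:
        return True  # already serving from the candidate; nothing to switch
    for _ in range(required_passes):
        if not health_check(candidate):
            return False
    router.active = candidate
    return True
```

For this particular migration, the health check shouldn’t take the index for granted: embeddings from text-embedding-ada-002 and text-embedding-3-small are not comparable, so the green deployment needs a retrieval index re-embedded with the new model. Both models return 1536-dimensional vectors by default, so a dimension check alone won’t catch a green service querying the old index; a smoke test that runs known queries and checks what comes back is more telling.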

Benefits You Actually Feel

Here’s what shifts when you apply GitOps to AI:

Traceability: Know exactly who changed what in your agents, prompts, or models—and when.

Rollbacks: Mistakes happen. Reverting a commit is way better than debugging a broken endpoint at 2AM.

Auditability: Critical for regulated industries like healthcare, finance, and government AI deployments.

Collaboration: Data scientists, ML engineers, and DevOps teams can collaborate through Git PRs—not ad-hoc messages.

Reliability: Every change follows a pipeline. No surprises when promoting from dev → staging → production.

Lessons Learned the Hard Way

1. Start small. You don’t need GitOps for everything on day one. Start with model configs or prompts.

2. Use human-readable formats. Store prompts, agent flows, and configs in JSON/YAML so they can be easily diffed in Git.

3. Automate validation. Before a config change can merge, run basic sanity checks: model compatibility, JSON schema validation, and so on.

4. Separate concerns. Different repos or branches for:

- Prompts
- Agents
- Pipelines
- Model artifacts

Keeps things clean.

5. Think like software, not research. When deploying AI in production, treat models and agents as software releases, not experiments.
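Going back to lesson 3, here’s a minimal sketch of a pre-merge check, assuming a hypothetical JSON agent config with `name`, `model`, `system_prompt`, and `temperature` fields. The model allowlist and the temperature bounds are placeholders; in practice they would come from your own platform policy. A real pipeline might express the structural rules as a JSON Schema and validate with a library such as `jsonschema`; this version sticks to the standard library.

```python
import json
from typing import Any, Dict, List

# Placeholder policy values, for illustration only.
ALLOWED_MODELS = {"gpt-4o", "gpt-4o-mini"}
REQUIRED_FIELDS = {
    "name": str,
    "model": str,
    "system_prompt": str,
    "temperature": (int, float),
}


def validate_agent_config(config: Any) -> List[str]:
    """Return human-readable problems; an empty list means the config passes."""
    if not isinstance(config, dict):
        return ["config must be a JSON object"]
    errors: List[str] = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in config:
            errors.append(f"missing field: {field}")
            continue
        value = config[field]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            errors.append(f"wrong type for field: {field}")
    model = config.get("model")
    if isinstance(model, str) and model not in ALLOWED_MODELS:
        errors.append(f"model not in allowlist: {model}")
    temperature = config.get("temperature")
    if (
        isinstance(temperature, (int, float))
        and not isinstance(temperature, bool)
        and not 0.0 <= temperature <= 2.0
    ):
        errors.append(f"temperature out of range [0, 2]: {temperature}")
    return errors


def check_files(paths: List[str]) -> int:
    """Validate each JSON config file; return a process exit code (0 = all valid)."""
    exit_code = 0
    for path in paths:
        with open(path, encoding="utf-8") as f:
            problems = validate_agent_config(json.load(f))
        for problem in problems:
            print(f"{path}: {problem}")
        if problems:
            exit_code = 1
    return exit_code
```

A CI job could call `check_files` on the configs changed in a PR and block the merge on a non-zero result.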

What’s Next: GitOps + MLOps + AIOps

We’re entering a future where:

- LLMOps platforms auto-trigger GitOps flows.
- Autonomous agents update their own configs through PRs (yes, self-improving agents).
- Git becomes the nervous system for dynamic AI ecosystems.

Bringing GitOps discipline to AI systems will become as normal as unit testing in software.

If you’re building serious AI products, this is a shift you can’t afford to ignore.